Research Paper Library Series - EmaFusion : A Self-Optimising System for Seamless LLM Selection & Integration

Paper Library Research paper simple understanding unfolding for tech community

These are my notes based on the paper Paper Notes: A Self Optimising System for Seamless LLM Selection and Integration by Ema Unlimited, Inc

TL;DR : How a clever mash-up of model-picking tricks beats GPT-4 accuracy at ¹⁄₂₀ th the cost ?

Big LLMs = big bills. Calling GPT-4 for every prompt is like hiring Messi to play your college futsal game.

Routing tries to send each question to the right-sized model.

Fusion mixes answers from several models to get a “best of all worlds.”

EMAFusion glues those ideas together:

Taxonomy router for “seen-it-before” questions.

Learned router for weird, out-of-syllabus stuff.

Cascade that starts cheap (Llama-3 class) and climbs up to pricey giants only if lower tiers look shaky—checked by multiple LLM “judges.”

Result: 94.3 % accuracy (vs. 91.7 % for the best single model) while costing 4× less on average and < 5 % of GPT-4’s bill.

EMAFusion in a nutshell

A self-optimising “switchboard” that decides which large-language model (LLM) should answer a user’s query, when several models should collaborate, and how to stop spending money once the answer is already good enough. The design merges Routing ideas (pick one “best” model) with Fusion ideas (get several answers and blend or vote).

1. Why should you care?

You probably run side-projects on a student budget. Every extra cent spent on API calls is one less Coffee (or Tea). EMAFusion shows you can keep (or even raise) quality and slash cost by being smart about which model answers what.

2. Two classic approaches—and their flaws

3. EMAFusion - Under the hood: the hybrid hack

Think of EMAFusion as the triage nurse + senior doctors + specialist panel in a hospital:

Taxonomy router (the nurse).

Fast embedding lookup slots the question into a known category (code help, translation, etc.).

If category is clear, route to the model that historically nails that type.

Learned router (the resident doctor).

A small neural classifier scores ambiguous queries and suggests the most promising model.

Cascade with judges (the consultant + review board).

Start with the cheapest decent model.

Get an answer → feed it to multiple judge LLMs plus a reward model.

If confidence ≥ threshold ➜ done.

Else → escalate to the next, beefier model.

Repeat until confident or you hit the “no more money” ceiling.

Because judges are independent, they catch when models hallucinate while avoiding the group-think of simple majority voting.

4. Does it actually work?

Accuracy jump: 94.3 % vs. 91.7 % for the best single router and +17.1 pts over GPT-4 on the authors’ challenge set.

Wallet win: 4 × cheaper than calling one big model every time, < 5 % of GPT-4 cost for the same set of tasks.

Flex dial: You can raise or lower the confidence thresholds to trade pennies for performance.

(Numbers come from >50 k mixed-domain queries, judged across seven dimensions like factual correctness, clarity, etc.).

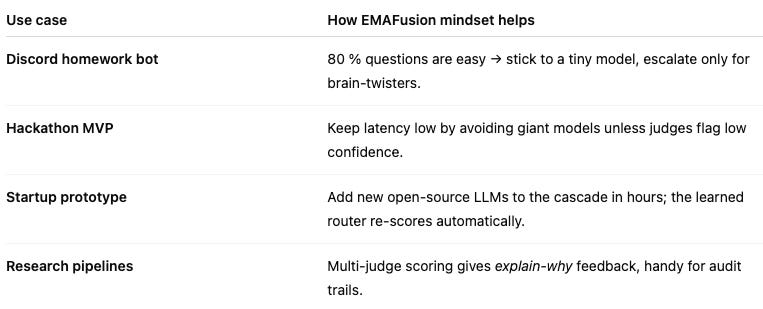

5. Where this can help you?

6. Caveats & open problems

Training the routers & judges needs data. The authors combined public sets (GSM8K, ARC, etc.) plus curated “hard mode” samples—a weekend project, not a one-hour task.

Latency can spike on rare escalations to GPT-4-class models; fix with async calls or timeouts.

Bias isn’t magic-fixed. Multiple judges reduce but don’t erase systemic biases—garbage in, garbage out.

7. Key takeaways

Stop brute-forcing GPT-4/other models for everything.

Mix routing and fusion to stretch your GPU/API budget.

Confidence-based cascading + independent judges is a robust pattern you can copy in ≤ 200 lines of Code{Proof of Concept following soon}.

EMAFusion’s public paper (April 2025) is a gold mine of routing thresholds, judge prompts, and data sampling tips—worth skimming before your next side-project sprint.